Headless CMS with AEM: A Complete Guide

You might have already heard about Headless CMS and you may be wondering if you should go “all-in” with this new model.

In this complete guide, we answer the most common questions, such as:

- What is the difference between Headless and traditional CMS?

- Is headless the best choice for your next website implementation?

- How to use a headless CMS for your next project

It’s best to understand what Headless CMS means before you commit your next web project to a content delivery model that may not fit.

At One Inside, our expertise lies in implementing Adobe’s CMS, Adobe Experience Manager (AEM). We can show you what AEM can do with regard to content delivery, and in which cases headless is recommended.

What is a traditional CMS?

This is likely the one you are familiar with. Traditional CMS uses a “server-side” approach to deliver content to the web.

The main characteristics of a traditional CMS are:

- Authors generate content with WYSIWYG editors and use predefined templates.

- HTML is rendered on the server

- Static HTML is then cached and delivered

- The management of the content and the publication and rendering of it are tightly coupled

Let’s define what a headless CMS is now.

What is a headless CMS?

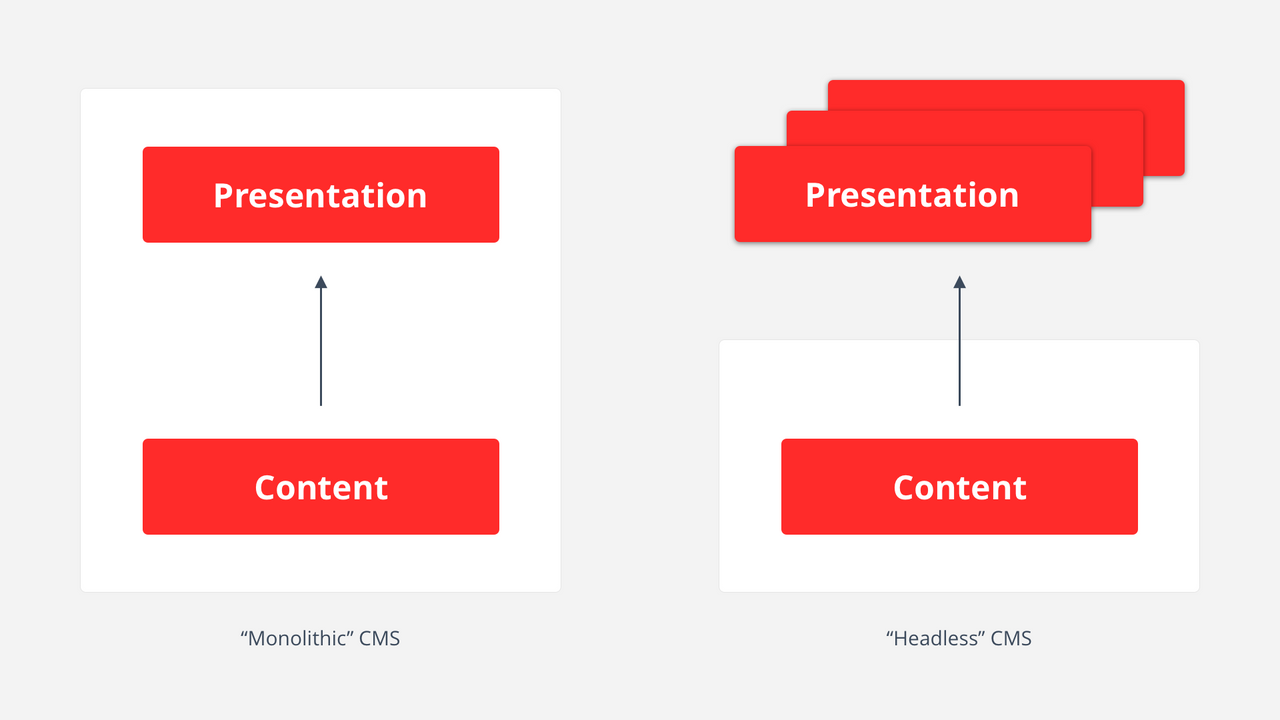

A headless CMS decouples the management of the content from its presentation completely. Headless CMS can also be called an API-first content platform.

The authors create content in the backend, often without a WYSIWYG editor. The content created is not linked to a predefined template, meaning the author cannot preview the content.

The content is then distributed via an API.

The presentation of the content on the website, mobile app, or any other channels, is done independently. Each channel fetches the content and defines the presentation logic.

A headless CMS is mainly made of

- A backend to create structured forms of content

- An API to distribute content
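The decoupling described above can be sketched in a few lines of TypeScript. This is only an illustration: the `ArticleContent` shape and both render functions are hypothetical and stand in for whatever a real content API and real channels would use.

```typescript
// Hypothetical structured content item, as a headless CMS API might return it.
interface ArticleContent {
  title: string;
  summary: string;
  body: string;
}

// Each channel owns its presentation logic; the content itself is shared.
// HTML escaping is left out to keep the sketch short.
function renderForWeb(article: ArticleContent): string {
  return "<article><h1>" + article.title + "</h1><p>" + article.body + "</p></article>";
}

function renderForSmartwatch(article: ArticleContent): string {
  // A small screen only shows the title and the short summary.
  return article.title + ": " + article.summary;
}

const sampleArticle: ArticleContent = {
  title: "Headless CMS",
  summary: "Content via API",
  body: "A headless CMS decouples content from presentation.",
};

const webOutput = renderForWeb(sampleArticle);
const watchOutput = renderForSmartwatch(sampleArticle);
```

The same content object feeds both channels; only the rendering differs.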

Let’s now look at the last category: a CMS that supports both the traditional and the headless model.

What is a hybrid CMS?

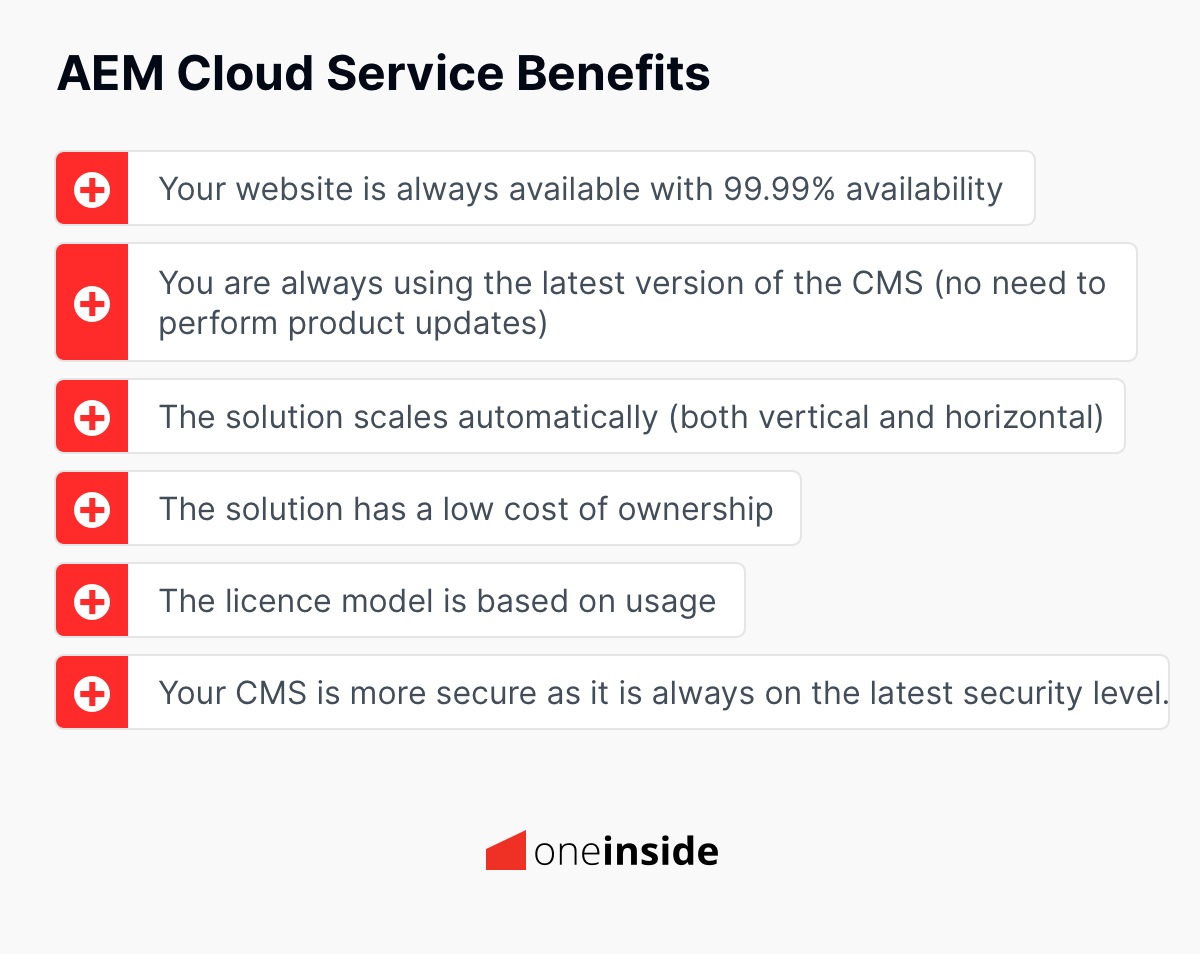

A Hybrid CMS is a CMS supporting both content delivery models: the Headless and the traditional.

You can create content in a classic way, by creating a page in the backend, previewing the page, and publishing it.

On the other hand, the content created can be distributed via an API as well.

This hybrid approach is typically offered by traditional CMS products, because it is straightforward for them to deliver the content already stored in their repository via an API.

With a hybrid approach you get the best of both worlds:

- Single source for content and assets

- Multichannel delivery

- Authors only need to learn one tool for all content authoring

- The administration is simplified (one login, one server, one technology for content, etc.)

Is AEM a Headless CMS?

Yes, with Adobe Experience Manager you can create content in a headless fashion.

The content can be fully decoupled from the presentation layer and served via an API to any channel.

You might know that AEM offers a great interface for authors enabling them to create content by using predefined templates and web components.

The content can be organised hierarchically and published immediately to websites or any other channels.

As AEM offers the (very) best of both worlds, it supports the traditional approach and the headless way. AEM is considered a Hybrid CMS.

The Headless features of AEM go far beyond what “traditional” Headless CMS can offer.

How does AEM work in headless mode for SPAs?

Since version 6.4, AEM supports the Single Page Application (SPA) paradigm with the SPA Editor.

This enables content authors to build dynamic as well as content-focused applications in the same way they are used to creating pages.

SPAs are currently mostly used for applications whose pages and layouts are fixed in code.

Enabling dynamic page creation, layouts and components in a SPA with a visual content editor shows how valuable AEM’s Hybrid CMS approach is.

With the SPA Editor 2.0 it’s possible to only deliver content to specific areas or snippets in the app.

We are going to look into several aspects of how AEM implements the headless CMS approach:

- What is the difference between rendering HTML in the backend vs SPA?

- How does the SPA WYSIWYG content editor work?

- What are Content APIs?

- How to develop SPAs with AEM

- What is Server Side Rendering (SSR)?

- How to use Content Fragments and the GraphQL API?

We will use the technical insight gained in this section to conclude what the pros and cons of SPAs with AEM are and in which cases this approach is best.

Rendering HTML in the backend vs Single Page Application

Traditionally, the HTML of a web page is rendered by a backend server.

The browser loads the HTML and linked resources. Javascript is then used to enhance the user experience with dynamic functionality.

When a user navigates to another page, that page is loaded in full and the process is repeated.

This approach works well for simpler and static pages. However, websites have become more and more complex and feature rich.

Websites now often behave like full-fledged applications such as a social media platform or a banking portal.

The complex interactions, state management and consumption of many APIs make it difficult to develop and maintain frontend code.

That is why the Single Page Application method of developing dynamic web pages gained a lot of traction in the last decade. The responsibility of the view layer is shifted to the location where it is displayed: the browser.

With the SPA approach, the first request loads a nearly empty HTML document together with Javascript and CSS. Javascript then dynamically assembles the webpage.

This is a simplified example of the HTML delivered by a SPA:

<head>

<script src="/spa/main.js"></script>

<link rel="stylesheet" href="/spa/style.css">

</head>

<body>

<div id="app"></div>

</body>

Any additional data or content like text, products, user account info, images etc. are requested from backend APIs.

If the user navigates to a different link, only the content area is replaced and re-rendered; the rest of the UI does not change. This makes the website feel like an application rather than a set of separate pages.
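As a rough sketch of this behaviour, the following TypeScript models the page as a small state object. The names `AppShellState`, `contentByRoute`, `initialLoad` and `navigate` are made up for illustration, and the in-memory lookup stands in for a request to a backend API.

```typescript
// The parts of the UI that stay in place across navigations.
interface AppShellState {
  header: string;
  footer: string;
  content: string; // the only part replaced on navigation
  pageLoads: number; // counts full page loads, for illustration
}

// Hypothetical content lookup; a real SPA would request this from a backend API.
const contentByRoute: { [route: string]: string } = {
  "/home": "Welcome",
  "/products": "Product list",
};

// First request: the shell and the initial content are set up once.
function initialLoad(route: string): AppShellState {
  return {
    header: "Site header",
    footer: "Site footer",
    content: contentByRoute[route] || "Not found",
    pageLoads: 1,
  };
}

// Client-side navigation: swap the content, keep the shell, no new page load.
function navigate(state: AppShellState, route: string): AppShellState {
  return {
    header: state.header,
    footer: state.footer,
    content: contentByRoute[route] || "Not found",
    pageLoads: state.pageLoads,
  };
}

const afterLoad = initialLoad("/home");
const afterNavigation = navigate(afterLoad, "/products");
```

After navigating, the header and footer are unchanged and the page load counter is still 1; only `content` differs.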

The SPA WYSIWYG content editor

The AEM “what you see is what you get” editor was extended to support SPAs.

Seeing the content created directly in the app is a blessing for anyone who has worked with a form-based editor (of a traditional Headless CMS).

Even better, an author that is familiar with AEM will immediately feel at home and be able to create content without learning a new tool – while also reusing any other AEM content or assets from the traditional web page.

How did Adobe implement this? Here’s an overview of the main elements:

If this all looks very familiar to you – it is! Except for steps 3, 7 and 8, it’s all the same as with a backend rendered page. You can find a more detailed view of this here.

Content APIs

We referred to Content APIs, also called AEM Content Services, a couple of times already.

What are they and how do they work?

AEM is built on the RESTful Sling framework. Architecturally, the visualisation layer is already completely decoupled from the data through the Java Content Repository. So in this regard, AEM already was a Headless CMS.

You can see this on any AEM page: change the extension from .html to .json (or .infinity.json to be more correct) and AEM will return all the content for the requested page. If you currently use AEM, check the sidenote below.

For this request AEM will return the raw data stored in the repository for the requested path. Even though this could be considered “Content as an API”, there are several issues with this approach:

- The format of the data is unstructured

- It’s difficult to work with for clients

- Contains unneeded or unwanted data like the username of the author

- Not stable in regards to changes

- Paths will not be externalised

- It is not possible to inject further logic, like resolving additional information for an image path

Therefore, an additional layer was introduced: the Sling Exporter Framework.

It allows us to easily define how existing Sling Models should be transformed and serialised to certain data formats like JSON or XML. In essence, it is the mapping from your repository data to the data exposed in the API.

Since Sling Models are already the basis of any modern AEM project this makes it straightforward to provide a transformation of the web content to JSON.

Adjusting existing Sling Models to support the Sling Exporter Framework usually just requires a single line:

@Model(adaptables = SlingHttpServletRequest.class)

@Exporter(name = "jackson", extensions = "json")

public class Text {

@ValueMapValue

@Getter

private String text;

@ValueMapValue

@Getter

private boolean isRichText;

}

If this component is requested with the selector .model (for example .model.json), the following JSON will be generated by the exporter framework:

{

"id": "text-2d9d50c5a7",

"text": "<p>Lorem ipsum dolor sit amet.</p>",

"richText": true,

":type": ".../components/content/text"

}

Actual examples of how the data would be transformed can be found on the core components dev page.

In the example of the image component you can note the following:

- User data is not exposed in the JSON (red)

- Path is rewritten (yellow)

- Models can be automatically enhanced with auxiliary data useful for clients (green).

In this case, AEM Core Components inject the required fields for Adobe Analytics with the standardised data layer for modern event-driven tracking.

With the corresponding Adobe Launch Extension this enables zero configuration Adobe Analytics Integration for Core Components.

Repository Data:

jcr:primaryType: nt:unstructured

jcr:createdBy: admin

fileReference: /content/dam/core-components-examples/library/sample-assets/lava-into-ocean.jpg

jcr:lastModifiedBy: admin

jcr:created:

displayPopupTitle: true

jcr:lastModified:

titleValueFromDAM: true

sling:resourceType: core-components-examples/components/image

isDecorative: false

altValueFromDAM: true

JSON Exported Sling Model:

{

"id": "image-f4b958f398",

"alt": "Lava flowing into the ocean",

"title": "Lava flowing into the ocean",

"src": "/content/core-components-examples/library/page-authoring/image/_jcr_content/root/responsivegrid/demo_554582955/component/image.coreimg.jpeg/1550672497829/lava-into-ocean.jpeg",

"srcUriTemplate": "/content/core-components-examples/library/page-authoring/image/_jcr_content/root/responsivegrid/demo_554582955/component/image.coreimg{.width}.jpeg/1550672497829/lava-into-ocean.jpeg",

"areas": [],

"lazyThreshold": 0,

"dmImage": false,

"uuid": "0f54e1b5-535b-45f7-a46b-35abb19dd6bc",

"widths": [],

"lazyEnabled": false,

":type": "core-components-examples/components/image",

"dataLayer": {

"image-f4b958f398": {

"@type": "core-components-examples/components/image",

"repo:modifyDate": "2019-01-22T17:31:15Z",

"dc:title": "Lava flowing into the ocean",

"image": {

"repo:id": "0f54e1b5-535b-45f7-a46b-35abb19dd6bc",

"repo:modifyDate": "2019-02-20T14:21:37Z",

"@type": "image/jpeg",

"repo:path": "/content/dam/core-components-examples/library/sample-assets/lava-into-ocean.jpg",

"xdm:tags": [],

"xdm:smartTags": {}

}

}

}

}
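The step from the repository data to the exported model can be pictured as an explicit mapping. The TypeScript below is a conceptual sketch only, not how the Sling Exporter Framework or the Core Components are implemented: the output fields `assetPath` and `decorative` are invented for illustration, and path rewriting is left out.

```typescript
// Raw properties as stored in the repository (a subset of the example above).
type RepositoryNode = { [property: string]: string | boolean };

// Hypothetical, simplified API shape; the real exported image model has more fields.
interface ExportedImageSketch {
  ":type": string;
  assetPath: string;
  decorative: boolean;
}

// Only explicitly mapped properties reach the API, so technical metadata
// such as jcr:createdBy is never exposed.
function exportImageSketch(node: RepositoryNode): ExportedImageSketch {
  return {
    ":type": String(node["sling:resourceType"]),
    assetPath: String(node["fileReference"]),
    decorative: node["isDecorative"] === true,
  };
}

const rawImageNode: RepositoryNode = {
  "jcr:primaryType": "nt:unstructured",
  "jcr:createdBy": "admin",
  "sling:resourceType": "core-components-examples/components/image",
  fileReference: "/content/dam/core-components-examples/library/sample-assets/lava-into-ocean.jpg",
  isDecorative: false,
};

const exportedImage = exportImageSketch(rawImageNode);
const exportedImageKeys = Object.keys(exportedImage);
```

Because the mapping is a whitelist rather than a dump of the node, adding a new repository property does not change the API unless the mapping is extended.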

A complete example of a content structure which supports the Sling Exporter Framework might look like this:

Pages with their properties, editable template structure for static components, responsive grid (“parsys”) components and the content of the components – basically all information which describes the content of the page – are exported in a well-defined consistent API intended for clients to be consumed and rendered.
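To give an idea of how a client might consume such a structure, here is a small TypeScript sketch that walks a nested page model. It assumes the `:type`, `:items` and `:itemsOrder` keys used in AEM's model JSON; the resource types in the sample data are hypothetical.

```typescript
// Minimal shape of an exported node: its type plus optional ordered children.
interface ExportedNode {
  ":type": string;
  ":items"?: { [itemName: string]: ExportedNode };
  ":itemsOrder"?: string[];
}

// Collect the resource types depth-first, following the authored order.
function collectTypes(node: ExportedNode): string[] {
  let types: string[] = [node[":type"]];
  const items = node[":items"] || {};
  const order = node[":itemsOrder"] || [];
  order.forEach(function (itemName) {
    const child = items[itemName];
    if (child) {
      types = types.concat(collectTypes(child));
    }
  });
  return types;
}

// Hypothetical page: a root container holding a text and an image component.
const samplePage: ExportedNode = {
  ":type": "myproject/components/page",
  ":itemsOrder": ["root"],
  ":items": {
    root: {
      ":type": "myproject/components/container",
      ":itemsOrder": ["text", "image"],
      ":items": {
        image: { ":type": "myproject/components/image" },
        text: { ":type": "myproject/components/text" },
      },
    },
  },
};

const typesInOrder = collectTypes(samplePage);
```

The order comes from `:itemsOrder`, not from the key order of `:items`, which is why the text component is listed before the image here.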

Sidenote for AEM users

Are you using AEM for your website?

If so, try replacing the .html with .json. Do you get any JSON back? If yes, you can try to add another child path named “jcr:content” before .json: /de/home.html -> /de/home/jcr:content.json.

If any JSON is returned you will probably also see some technical metadata and a username. Depending on how users are created, this may be a cryptic number, but it could also be a readable name or an email address.

In any case, you might want to discuss this with your team. Adobe recommends disabling the default Sling GET servlet on production publish instances.
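If you want to check several pages, the path rewrite from the sidenote is easy to script. The helper below is a small sketch; `toJcrContentJsonPath` is a name we made up, and it only builds the path, it does not send any request.

```typescript
// Turn a page path such as /de/home.html into /de/home/jcr:content.json.
function toJcrContentJsonPath(pagePath: string): string {
  const suffix = ".html";
  const endsWithHtml =
    pagePath.length >= suffix.length &&
    pagePath.lastIndexOf(suffix) === pagePath.length - suffix.length;
  // Strip a trailing .html if present, otherwise use the path as given.
  const basePath = endsWithHtml
    ? pagePath.slice(0, pagePath.length - suffix.length)
    : pagePath;
  return basePath + "/jcr:content.json";
}

const jcrContentPath = toJcrContentJsonPath("/de/home.html");
```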

Benefits of developing Single Page Application with AEM

Adobe put a lot of effort into making it as simple as possible to get up and running and develop SPAs with AEM.

All the mechanisms are also tightly integrated into existing AEM technology, making SPAs a first-class citizen in AEM.

Below is a short overview of what the setup looks like and what impact it has on developer teams working with AEM.

Setup & Onboarding

Adobe provides a reference implementation called Core Components with a large set of components containing all the current best practices in regards to AEM development (SPA or non-SPA).

Projects can be set up locally with the project templating tool AEM Maven Archetype, for on-premise or AEM as a Cloud Service installations. It supports creating a React or Angular SPA project template with the following:

- AEM base setup

- Core Components

- Setup for Sling Exporter Framework

- A frontend build chain that builds and deploys all assets directly into AEM

- Angular / React libraries for the AEM integration

- A static preview server for local, AEM-independent frontend development

Further, there is a very good starting tutorial for React and Angular that will get developers up and running quickly.

Of course, this doesn’t mean that a developer is ready to deliver production-ready AEM SPA solutions the next day, but it’s good to know that Adobe is committed to simplifying onboarding of developers.

AEM SPA Backend Development

AEM backend developers will have less work to do, because they no longer have to integrate the frontend, that is, take the static HTML and migrate it to HTL (AEM’s HTML Template Language). This integration is usually a big pain point of any larger AEM project, introducing bugs and costing time.

With the SPA approach, the interface between backend and frontend is no longer the HTML markup but instead the Content API, which can be predefined, specified and more easily adjusted.

Further, the responsibility of rendering the UI in the browser goes to the frontend team, where the expertise in this area usually lies anyway.

AEM developers can focus on what they know best: building the solid backbone of the application and ensuring content authors have the required tools and enjoyable interfaces to create content.

AEM SPA Frontend Setup

In AEM projects, frontend developers usually build a static prototype with a set of static components which are handed to the backend.

This is, as mentioned, usually a very inefficient process. It is hard to tackle this problem without requiring frontend developers to install AEM, which comes with its own set of problems.

Therefore, teams often simply accept this aspect of AEM development, even though it restricts frontend developers in building great user experiences.

With the SPA approach, frontend developers get the full power and responsibility of the frontend – without having to know a lot about or install AEM.

This is possible because the only communication with the backend goes through the Content API, which clearly separates providing the data from presenting it.

We know how Content APIs deliver the content. But how are they consumed from the frontend? There are three setups, each valuable depending on the context.

JSON Mock

A JSON mock file is basically a copy of an example output directly from AEM Content API.

The frontend developer can adjust this file, switch content, add components and more. They can simulate authoring in AEM by editing a file, as long as they move within the specification of the Content API.

Point to remote AEM

The frontend setup can be configured to point to a certain remote AEM instance like QA, pre-production or even production! Gone are the days when frontend developers had to struggle to reproduce an issue in their local environment.

This enables many more possibilities, like running a whole integration test suite in the frontend on productive content without having to move large amounts of data to a different stage.

Running as part of the AEM installation

As might be expected, the frontend can be deployed to an AEM instance.

It is important to locally test the integration with the authoring environment and the SPA editor during development.

This part could also be taken care of by the backend developers to avoid forcing the frontend developers to install AEM locally.

Of course, this will also be the setup used on stages or on production.
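From the frontend's point of view, the three setups differ mainly in where the model JSON is loaded from. The TypeScript below is one possible way to express that as configuration. The type `ContentSourceConfig`, the function `resolveModelUrl`, the mock file path and the QA host are all hypothetical; only the `.model.json` suffix follows the selector mentioned earlier.

```typescript
// One variant per setup described above.
type ContentSourceConfig =
  | { mode: "mock"; mockFile: string } // local JSON mock file
  | { mode: "remote"; host: string } // remote AEM instance (QA, pre-production, ...)
  | { mode: "embedded" }; // SPA deployed inside AEM, same origin

// Decide which URL the SPA should load its page model from.
function resolveModelUrl(config: ContentSourceConfig, pagePath: string): string {
  switch (config.mode) {
    case "mock":
      return config.mockFile;
    case "remote":
      return config.host + pagePath + ".model.json";
    case "embedded":
      return pagePath + ".model.json";
  }
}

const samplePagePath = "/content/mysite/en/home"; // hypothetical page path
const mockUrl = resolveModelUrl({ mode: "mock", mockFile: "/mocks/home.model.json" }, samplePagePath);
const remoteUrl = resolveModelUrl({ mode: "remote", host: "https://qa.example.com" }, samplePagePath);
const embeddedUrl = resolveModelUrl({ mode: "embedded" }, samplePagePath);
```

Keeping this decision in one place means the rest of the frontend code does not need to know which setup it is running in.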

AEM Frontend development

After understanding how the content comes to the SPA – how does the frontend code know how to render the content?

Similarly, how does the AEM editor know how to communicate with the SPA when the author changes content?

This is handled by the frontend libraries provided by Adobe for React and Angular.

Other frameworks are under consideration, but nothing has been announced regarding, for example, Vue or Svelte support. The only option in these cases would be to build a similar library.

These libraries contain functionality to parse the Content API output, instantiate the required components, fill the content properties and dynamically put them in the required order into the application context so that they are rendered on the page.

The same goes for other aspects like switching a page, routing and so on. Most of this works out of the box.

The main task the frontend developer has is to map the frontend components to the resource types of the backend Sling Models.

This will also enable the AEM editor to inform the frontend about which component needs to refetch its content when editing.

To demonstrate this in practice, consider the following standard Angular component:

The component code has to be extended with the following:

This “maps” the frontend code to an AEM resource type which corresponds to a Sling Model which maps to the content in the repository.

Additionally, an “Edit Config” is provided to give hints to the AEM editor, for example when a component is considered “empty”, so that a placeholder can be displayed.
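The idea behind this mapping can be illustrated without any framework. The following TypeScript is a self-contained sketch of a component registry and is not Adobe's library code: `mapResourceType`, `renderComponent`, the `SketchEditConfig` fields and the resource type are assumptions chosen to mirror the description above.

```typescript
interface ComponentProps {
  [property: string]: unknown;
}

type ComponentRenderer = (props: ComponentProps) => string;

// Hints for the editor: when is the component empty, and what to show instead.
interface SketchEditConfig {
  emptyLabel: string;
  isEmpty: (props: ComponentProps) => boolean;
}

interface ComponentMapping {
  render: ComponentRenderer;
  editConfig: SketchEditConfig;
}

// Registry from backend resource type to frontend component.
const componentRegistry: { [resourceType: string]: ComponentMapping } = {};

function mapResourceType(resourceType: string, render: ComponentRenderer, editConfig: SketchEditConfig): void {
  componentRegistry[resourceType] = { render: render, editConfig: editConfig };
}

// Look up the component for a model node and render it; in the editor,
// empty components are replaced by a placeholder.
function renderComponent(resourceType: string, props: ComponentProps, inEditor: boolean): string {
  const mapping = componentRegistry[resourceType];
  if (!mapping) {
    return ""; // unknown resource types are skipped in this sketch
  }
  if (inEditor && mapping.editConfig.isEmpty(props)) {
    return "[" + mapping.editConfig.emptyLabel + "]";
  }
  return mapping.render(props);
}

// Hypothetical text component mapped to a hypothetical resource type.
mapResourceType(
  "myproject/components/content/text",
  function (props) { return "<div>" + String(props["text"] || "") + "</div>"; },
  { emptyLabel: "Text", isEmpty: function (props) { return !props["text"]; } }
);

const renderedText = renderComponent("myproject/components/content/text", { text: "Hi" }, false);
const editorPlaceholder = renderComponent("myproject/components/content/text", {}, true);
```

In a real project the Adobe libraries take over the registry and the rendering; the developer mostly supplies the resource type, the component and the edit hints.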

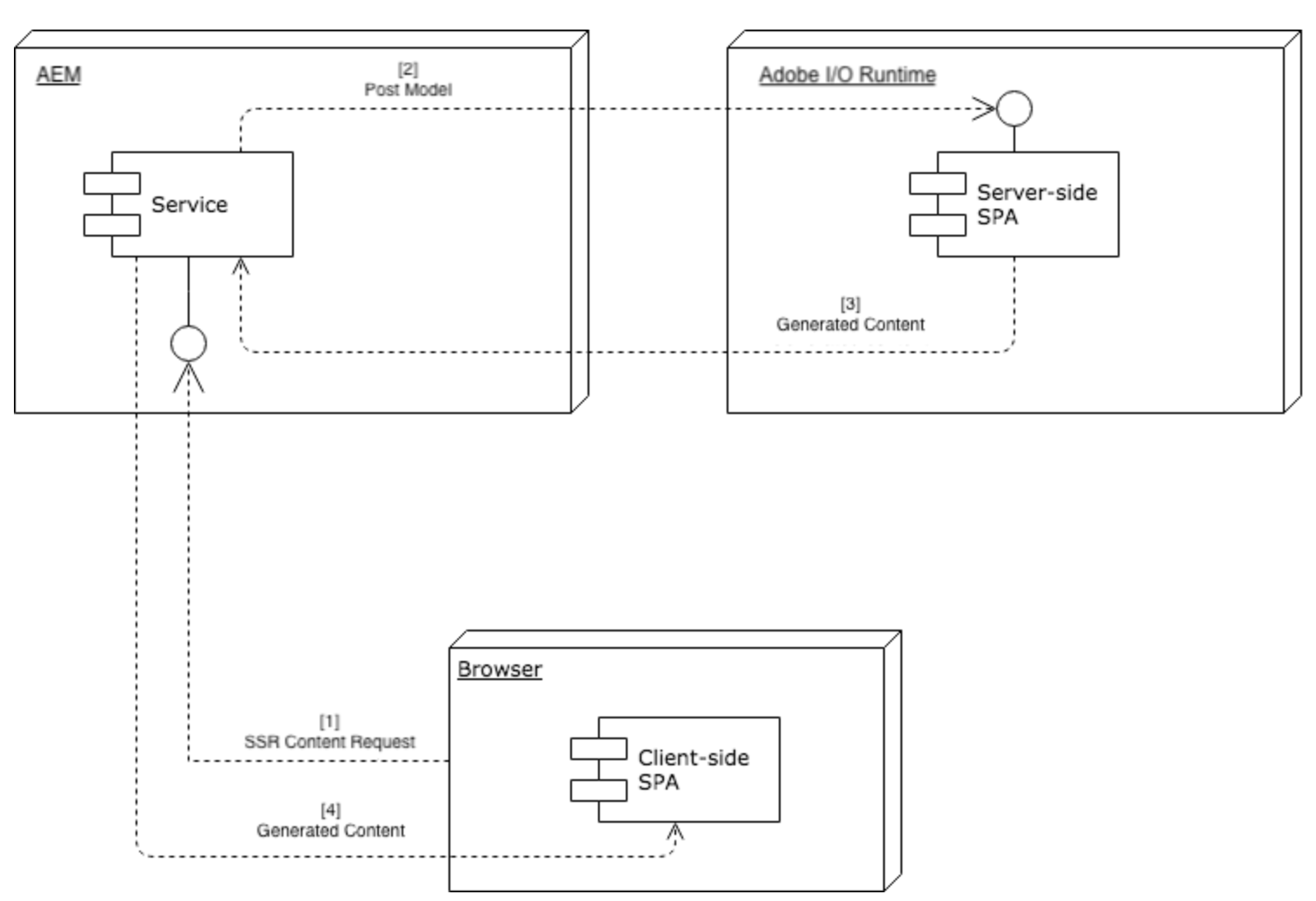

Server Side Rendering (SSR)

There are two major concerns when using the SPA approach: initial page loading speed and SEO.

Both can be solved by implementing SSR into the architecture. We will also briefly discuss how to actually implement this architecture (source: Adobe).

Initial page load issue with SPA

As discussed, with SPAs only an HTML document with an empty body is sent to the browser.

Therefore, the browser can initially not display any content and the user has to wait until the Javascript – which for SPAs is usually quite a lot compared to more traditional sites – is done loading, parsing and executing.

In the meantime, only a blank screen or a loading spinner is visible.

For a complex website like a banking application this is less of an issue since the users probably accept some loading time.

For a content-focused page where the user expects it to load within a couple of hundred milliseconds, this is usually not acceptable.

Server Side Rendering solves this by instead executing the Javascript in the backend in a headless browser or a NodeJS server and returning the populated initial HTML to the client.

The browser can directly start rendering the HTML and the users get immediate feedback. The browser will still fetch the Javascript and the SPA will inject itself into the rendered HTML (this is usually referred to as “rehydration”).

From there on, the execution flow continues as if the page was initially rendered in the browser. For more details and considerations we recommend this article by Google.
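The difference between the two responses can be sketched as follows. This is a simplified TypeScript illustration under our own assumptions: `SsrPageModel`, `renderAppMarkup`, `clientOnlyHtml` and `serverRenderedHtml` are hypothetical names, and real SSR setups use the rendering functions of the chosen framework instead of string concatenation.

```typescript
interface SsrPageModel {
  title: string;
  paragraphs: string[];
}

// Shared view logic: the same function can run on the server and in the browser.
// HTML escaping is left out to keep the sketch short.
function renderAppMarkup(model: SsrPageModel): string {
  const body = model.paragraphs
    .map(function (paragraph) { return "<p>" + paragraph + "</p>"; })
    .join("");
  return "<h1>" + model.title + "</h1>" + body;
}

// Without SSR: the server only sends the empty mount point.
function clientOnlyHtml(): string {
  return '<div id="app"></div>';
}

// With SSR: the server runs the view logic and sends populated markup.
function serverRenderedHtml(model: SsrPageModel): string {
  return '<div id="app">' + renderAppMarkup(model) + "</div>";
}

const ssrSample: SsrPageModel = { title: "Home", paragraphs: ["Hello", "World"] };
const emptyShell = clientOnlyHtml();
const populatedShell = serverRenderedHtml(ssrSample);
```

Since the browser later runs the same view logic on the same model, it can attach to the markup that is already there instead of building it from scratch.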

SEO and SPA

When a search engine crawls a SPA it will only see a blank HTML without content to parse. This can cause issues with SEO and ranking.

Some crawlers, like Google, support executing Javascript. Similar to SSR they will execute the Javascript to then crawl and index the content.

However, Google does not treat HTML and Javascript rendered pages equally. There are always two passes: first raw HTML and then processed Javascript.

These passes are not treated equally: the first pass, which only reads and processes the HTML, has priority. The consequence can be a (much) longer timeframe until a page is indexed and ranks on Google.

Even though the disadvantages regarding Google seem to be decreasing, not all search engines support executing Javascript.

There are also other contexts where non-SSR SPAs become problematic, for example generating a preview to share a page on social media.

Server Side Rendering in AEM

The groundwork and architectural pattern for SSR with AEM is proposed by Adobe and the recommendation is to use Adobe I/O to build the infrastructure.

We also heard that Adobe is working on an extended tutorial or possibly even reference implementation for SSR with Adobe I/O.

But for now this would have to be developed with a custom implementation on the basis of this sample code base.

How does Headless AEM work for clients that are not web-based?

So far this article focused on content-focused web pages or mobile hybrid SPAs.

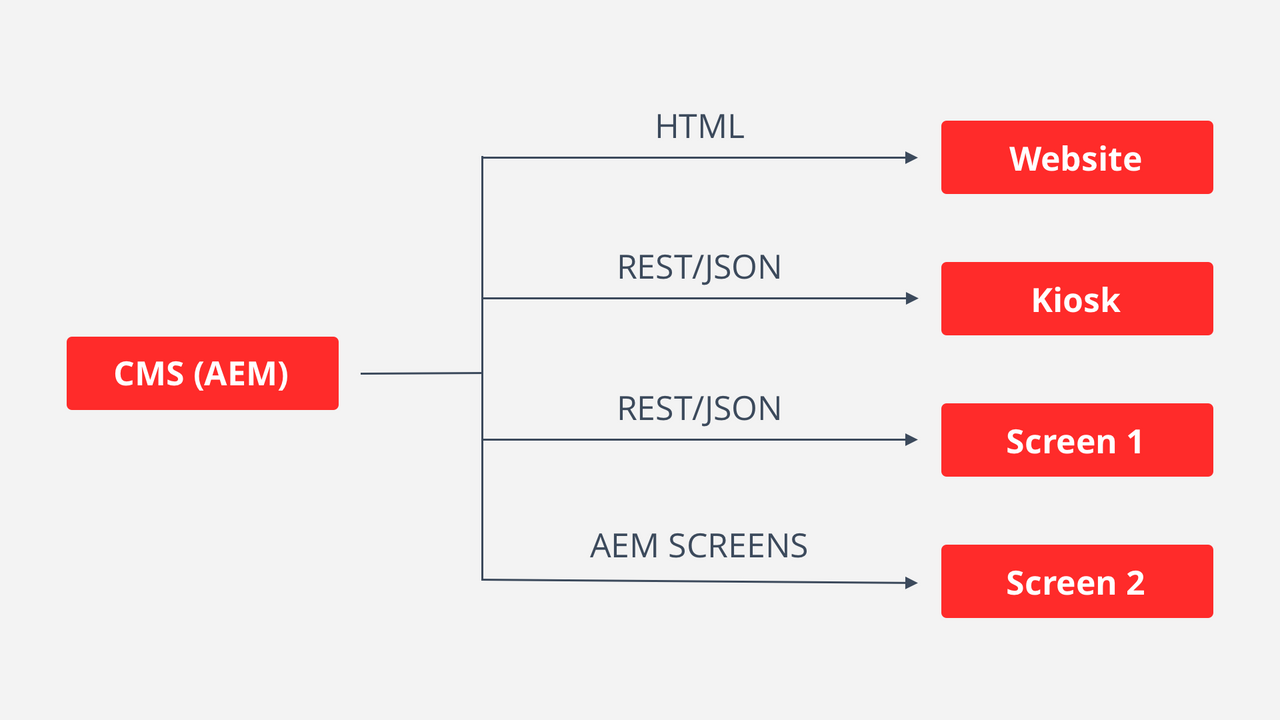

The headless capabilities of AEM and the decoupling of content from HTML rendering enable many more use cases where content needs to be displayed, from native Android or iOS apps and social media snippets to digital signage systems and small IoT devices.

To accommodate such a vast ecosystem, loosely structured web content is problematic.

However, the rich feature set of AEM also allows you to create structured content according to a predefined model by using the “AEM Content Fragments” feature.

Content fragments are predefined form-based or simple rich text pieces of content which can be linked and structured. Other content from AEM like text, assets and tags can of course be reused in content fragments as well.

Fetching structured data with GraphQL

Recently AEM was extended to allow consuming content fragments with GraphQL (besides the already existing simpler JSON APIs).

In concept, GraphQL can be compared to a SQL database query, the difference being that the query is not used for a database but instead an API.

This allows different clients to query the API according to their own needs instead of the API having to provide different endpoints returning different amounts or sets of data for different clients.

For example, a smartwatch might want to display less content than the corresponding app and would query only what is needed without the backend having to support this use case.
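A short TypeScript sketch shows how two clients could ask the same API for different amounts of data. The model name `article`, its fields and the `articleList { items { ... } }` query shape are assumptions for illustration; the actual schema depends on the content fragment models defined in your AEM instance.

```typescript
// Build a simple list query that selects only the given fields.
function buildListQuery(modelName: string, fields: string[]): string {
  return "{ " + modelName + "List { items { " + fields.join(" ") + " } } }";
}

// The smartwatch only needs the title ...
const watchQuery = buildListQuery("article", ["title"]);

// ... while the app asks for more fields from the same endpoint.
const appQuery = buildListQuery("article", ["title", "teaser", "author"]);
```

Both queries go to the same endpoint; the backend does not need a dedicated endpoint per client.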

Since GraphQL requires a predefined data structure, it would not work that well with web content, so content fragments were the obvious choice.

Adobe is working on GraphQL support, and additional features like subscription, mutation and pagination are on their way. Due to the flexibility of the query possibilities, performance is a key topic for any GraphQL API.

Adobe plans to tackle this by using “persisted queries”.

A client will first “register” a query. AEM will give a handle for the query. This query handle can then be invoked with a simple GET call which can be cached, making any following query fast and scalable.
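The register-then-invoke flow can be modelled in memory as follows. This TypeScript is a conceptual sketch and not AEM's implementation: the store, the handle format and the function names are invented, and the in-memory cache stands in for the HTTP caching that a plain GET request makes possible.

```typescript
interface PersistedQueryStore {
  queries: { [handle: string]: string };
  cache: { [handle: string]: string };
  executions: number; // how often a query was actually executed
}

function createQueryStore(): PersistedQueryStore {
  return { queries: {}, cache: {}, executions: 0 };
}

// Step 1: the client registers a query and receives a handle (hypothetical format).
function registerQuery(store: PersistedQueryStore, query: string): string {
  const handle = "q" + (Object.keys(store.queries).length + 1);
  store.queries[handle] = query;
  return handle;
}

// Step 2: the handle is invoked; repeated calls are answered from the cache.
function invokeByHandle(
  store: PersistedQueryStore,
  handle: string,
  execute: (query: string) => string
): string {
  const cached = store.cache[handle];
  if (cached !== undefined) {
    return cached;
  }
  const query = store.queries[handle];
  if (query === undefined) {
    throw new Error("Unknown query handle: " + handle);
  }
  store.executions += 1;
  const result = execute(query);
  store.cache[handle] = result;
  return result;
}

const queryStore = createQueryStore();
const articleHandle = registerQuery(queryStore, "{ articleList { items { title } } }");
// Hypothetical executor that would normally run the query against the content.
const fakeExecute = function (query: string): string { return "result for " + query; };
const firstResult = invokeByHandle(queryStore, articleHandle, fakeExecute);
const secondResult = invokeByHandle(queryStore, articleHandle, fakeExecute);
```

The second call returns the same result without executing the query again, which is the effect caching is meant to achieve.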

When to implement Adobe Experience Manager in a headless way

As discussed in a previous article about Headless CMS vs Hybrid CMS, you cannot go Headless at any price. There are some limitations and it might not be the best for every use case.

Let’s go through the pros and the cons of the headless approach.

The pros of the headless approach:

- Enables implementation of content-focused SPAs with dynamic pages, layouts and components (for web or hybrid mobile apps)

- Delivers a unique user experience for content pages achievable with SPAs (e.g. no page reload)

- Well known WYSIWYG authoring environment for SPAs

- “Full” integration for content focused SPAs, (upcoming) “light” integration for existing projects or limited content areas

- Content & Asset reuse – all content from one CMS

- Omnichannel delivery of content to any type of client from smart watches to digital signage to IOT

- Supported by Adobe (editor, backend technology, frontend libraries, project setup)

- Separation of concerns: AEM developers build the backend, frontend developers the frontend – no “integrating of markup” anymore

- Probably fewer AEM developers and more React / Angular frontend developers required, simplifies hiring

The cons of the headless approach:

- SSR setup required, especially for content focused web pages (initial page load & SEO)

- No clear guidance on how to implement SSR from Adobe (yet)

- Traditional approach well established, architectures with many successful projects scaled to large user bases

- Many unknowns: learning curve for developers, some bumps in the road are to be expected with any new technology

- Frontend developers still have to consider the AEM editor with some limitations e.g. no use of the CSS viewport height (vh) units

- Not all AEM features are supported (yet)

- Only Adobe support for Angular and React, no Vue or Svelte

From this we conclude the following three key points:

- SPAs for content focused web pages are now a valid approach that makes it possible to deliver new UX without any sacrifices in content creation, with (almost) the full capabilities of AEM supported. This requires the implementation of an architecture with SSR, which has to be custom built until there is direct guidance from Adobe.

- AEM is a fully capable headless CMS that can deliver content to any device or screen with modern technologies and standards (JSON API, GraphQL etc) which should be able to scale to large user bases due to performance optimisations by Adobe.

- Separation of concerns between providing data and presenting data on the technical level means great improvements for the developer teams. No integration of markup. Frontend developers get the freedom they need and will be happier coders. Backend developers can focus on what they know best. A side benefit is that probably fewer AEM developers will be needed, who are much more difficult to hire than React or Angular developers.

From these takeaways we can recommend AEM headless or hybrid to be considered when the following points are met:

- You aim to deliver the same experience and code base for a content-focused page on the web and a hybrid mobile app.

- You struggle to find enough AEM developers for web-based projects but have a strong team of frontend developers.

- You have an existing SPA and want to display content in limited areas of the app (wait for upcoming SPA editor 2.0).

- You want to deliver content from AEM to platforms that are not web technology based (headless).

Finally, is Hybrid CMS the best solution?

Yes, absolutely!

Hybrid CMS is the future, because it makes it possible to keep the established traditional approaches while being able to deliver content to any other device or platform, all from a single, consistent user interface using modern technologies like SPAs and GraphQL.

A single platform for all your content also means reusing content across all platforms!

Text, assets, tags, Content Fragments, Experience Fragments – all can be reused on your traditional site, SPA, native iOS or Android App, digital signage (AEM Screens) or on a toaster with a display.

Even better, through the tight integration of AEM into the Adobe Experience Cloud, content can be further reused in emails (Adobe Campaign or Marketo), personalisation (Adobe Target) and many more tools and technologies.

For web technology based projects, it also allows you to split the teams according to the separation of concerns.

This means that developers can focus on what they know and do best. Plus, hiring might be simpler because potentially fewer AEM developers will be needed.

The drawback? All this technology is quite new and there are no established best practices yet, so there naturally are some unknowns and risks for new projects.

Basil Kohler

AEM Architect

The post Headless CMS with AEM: A Complete Guide appeared first on One Inside.
