12 Steps to a Successful Migration from AEM On-Premise to AEM Cloud

Is your organization using the full potential of Adobe Experience Manager (AEM) to deliver outstanding digital experiences to your customers across multiple channels?

If you currently run your websites on an AEM installation hosted on your own servers, or rely on Adobe Managed Services, now is the ideal time to move to the cloud.

In 2020, Adobe ushered in a new era for its content management system (CMS) with AEM as a Cloud Service. It is now time for you and your company to prepare for this important shift and take advantage of this advanced cloud CMS.

At One Inside – A Vass Company, we have gained extensive experience by helping numerous well-known companies move from on-premise solutions to the cloud. We have delivered smooth transitions to AEM Cloud in less than three months.

In this detailed guide, our AEM specialists share their expertise on the following topics:

- How do you achieve a seamless transition from AEM On-Premise to AEM Cloud?

- Which key steps are decisive for a successful migration to AEM Cloud?

- Which common pitfalls should you avoid?

Before we turn to the individual migration steps, it is worth taking a closer look at the compelling benefits of AEM Cloud.

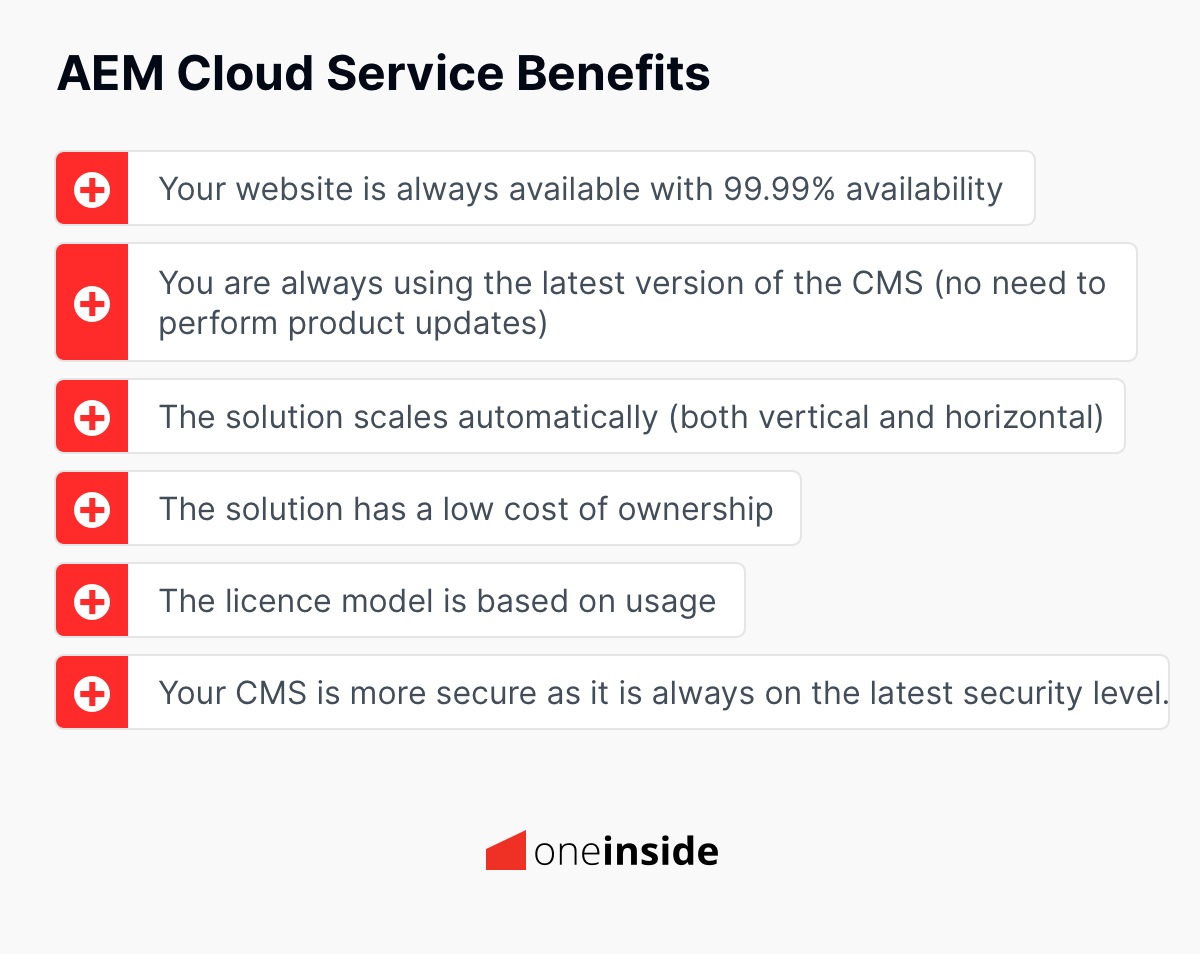

What Are the Benefits of Moving to AEM Cloud?

Any forward-looking company needs to set out clearly what moving its AEM systems to the cloud will gain. That case is what convinces management to approve the step.

Let's look at the reasons why this step could matter for your company.

Moving from AEM On-Premise or Managed Services to AEM Cloud brings a wealth of benefits, including:

Reduced operating costs and a medium-term return on investment (ROI)

Using AEM Cloud significantly lowers the total cost of ownership. Areas where your company can save include:

- Licenses: A usage-based pricing model can reduce license costs. The move to the cloud is also an opportunity to renegotiate pricing with Adobe.

- Operations: AEM Cloud simplifies many operational processes, such as environment management, and updates are applied automatically.

- Infrastructure and hosting: If you have hosted AEM in-house so far, you will save considerably on infrastructure and hosting, because the cost of maintaining your own servers disappears.

- Staff: You will need fewer full-time employees, which leads to further savings.

Despite the initial cost of the migration project, our team completed the move to AEM Cloud in less than three months.

The timeframe varies depending on the complexity of the integrations and the number of websites and domains involved.

Our analyses show that the payback period (return on investment, ROI) for such a project is typically under three years, which makes the migration a worthwhile investment.
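A simple way to check such a claim is to compute the payback period. This undiscounted version divides the one-off migration cost by the net yearly savings. The sketch below splits the savings into the four categories listed above. All amounts are hypothetical placeholders, not figures from our projects.

```python
def payback_years(migration_cost: float, annual_savings: float) -> float:
    """Simple (undiscounted) payback period: years until cumulative savings cover the one-off cost."""
    if annual_savings <= 0:
        raise ValueError("annual_savings must be positive")
    return migration_cost / annual_savings


# Hypothetical net yearly savings per category, for illustration only.
savings_by_category = {
    "licenses": 20_000.0,
    "operations": 15_000.0,
    "infrastructure_and_hosting": 15_000.0,
    "staff": 10_000.0,
}
project_cost = 150_000.0  # hypothetical one-off migration project cost

years = payback_years(project_cost, sum(savings_by_category.values()))
print(f"Payback after {years:.1f} years")  # Payback after 2.5 years
```

A fuller business case would also discount future savings. This sketch deliberately leaves that out.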

Always the latest features, because your CMS is always up to date

With AEM as a Cloud Service, costly version upgrades are a thing of the past. Adobe automatically updates the CMS with the latest features, which makes traditional version numbers obsolete.

You always work with the most current version, much like with other SaaS solutions.

Increased security

Security is a critical concern, especially for large enterprises, and AEM as a Cloud Service may well offer more security than your current solution.

The cloud solution is continuously monitored, and patches are applied promptly as soon as vulnerabilities are discovered.

For detailed information on the security of Adobe's cloud services, please refer to Adobe's corresponding security documentation.

99.9% uptime

AEM Cloud comes with a 99.9% availability commitment, so your website remains reachable almost all the time.

The solution is designed to scale efficiently both horizontally and vertically. This maintains a high service level and keeps the site reliable even under peak load.
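You can work out directly what 99.9% means in practice. The remaining 0.1% is the maximum downtime budget. The sketch below assumes a 30-day month and a 365-day year. Your agreement with Adobe defines the binding measurement period and any exclusions.

```python
def downtime_budget_minutes(availability_pct: float, period_hours: float) -> float:
    """Maximum downtime (in minutes) allowed over a period for a given availability percentage."""
    return (1 - availability_pct / 100) * period_hours * 60


MONTH_HOURS = 30 * 24  # assumption: 30-day month
YEAR_HOURS = 365 * 24  # assumption: 365-day year

print(f"Per month: {downtime_budget_minutes(99.9, MONTH_HOURS):.1f} min")  # Per month: 43.2 min
print(f"Per year: {downtime_budget_minutes(99.9, YEAR_HOURS) / 60:.2f} h")  # Per year: 8.76 h
```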

No learning curve for your marketing team

Another benefit of migrating to AEM Cloud is that your marketing team will already be familiar with the tool. The architecture and processes change significantly, but the user experience for content authors stays the same.

Content authors will notice no difference after the migration, so no additional training or adjustments are required.

Focus on innovation and faster time to market

Managing an enterprise CMS the old way is no longer state of the art. Your organization should embrace the new reality.

AEM Cloud accelerates innovation for several reasons:

- Your team can focus entirely on value-adding projects.

- You benefit from Adobe's latest innovations.

Through our extensive experience with AEM Cloud and our work with various clients, we have observed a significantly shorter time to market. Projects are completed quickly, and new websites can be launched within a few months.

If your company wants to present a new product or service, it will appreciate the benefits of this next-generation CMS.

Step-by-Step Transition from AEM On-Premise to AEM as a Cloud Service

In this section, we guide you along the path from your on-premise AEM installation to AEM as a Cloud Service.

Each phase of this process has been carefully designed so that the move to the cloud goes smoothly and successfully. Together, the phases cover every key point from the initial analysis through to live operation.

Schritt 1 – Analyse, Planung und Aufwandsschätzung

Der Auftakt dieses Unterfangens umfasst das Verständnis für AEM als Cloud-Service, inklusive der damit einhergehenden Neuerungen und der auslaufenden Features. Zu den nennenswerten Änderungen gehören unter anderem:

- Anpassungen der Architektur mit automatischer horizontaler Skalierbarkeit

- Anpassungen in der Projektcode-Struktur

- Verwaltung digitaler Assets

- Integriertes CDN (Content Delivery Network)

- Konfiguration der Dispatching-Systeme

- Netzwerk- und API-Anbindungen, inklusive IP-Whitelisting

- Einrichtung von DNS- und SSL-Zertifikaten

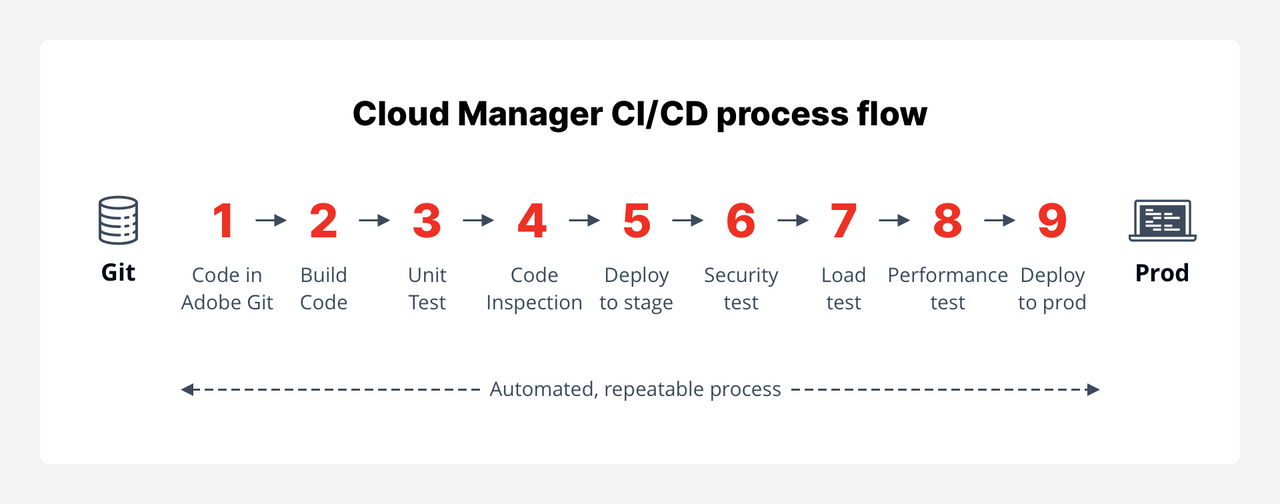

- Einrichtung und Verwaltung von CI/CD-Pipelines

- Zugriff für AEM-Editoren via Adobe-Konto

- Definition von Benutzergruppen und Zugriffsrechten

Zudem ist es von grosser Bedeutung, Ihre aktuelle AEM-Struktur eingehend zu prüfen, insbesondere in Bezug auf Schnittstellen und Verknüpfungen mit anderen Systemen:

- APIs oder interne Netzwerkschnittstellen

- Services von Drittanbietern, vor allem jene, die durch IP-Whitelisting geschützt sind

- Sämtliche Datenimport-Dienste für AEM

- Zugangssysteme mit eingeschränkten Nutzerkreisen (Closed User Groups, CUG)

Diese Komponenten erfordern eine genaue Prüfung, da Anpassungen nötig sein könnten.
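One way to structure this review is to keep a simple inventory that records each integration and flags why it needs attention. The following Python sketch is only an illustration. The `Integration` fields, the review reasons and the example entries are all hypothetical. They mirror the categories listed above.

```python
from dataclasses import dataclass


@dataclass
class Integration:
    """Hypothetical inventory entry for one system connected to AEM."""
    name: str
    internal_network: bool = False  # reachable only inside the corporate network
    ip_allowlisted: bool = False    # the remote side only accepts known source IPs
    imports_data: bool = False      # feeds data into AEM
    uses_cug: bool = False          # relies on closed user groups


def review_reasons(item: Integration) -> list[str]:
    """Return the reasons why an integration should be reviewed before the migration."""
    reasons = []
    if item.internal_network:
        reasons.append("needs network connectivity from the cloud environment")
    if item.ip_allowlisted:
        reasons.append("allowlist must include the new cloud egress IP")
    if item.imports_data:
        reasons.append("import job must target the new cloud environment")
    if item.uses_cug:
        reasons.append("closed user group setup must be re-tested")
    return reasons


inventory = [
    Integration("crm-api", internal_network=True, ip_allowlisted=True),  # hypothetical
    Integration("product-feed", imports_data=True),                      # hypothetical
    Integration("public-maps-widget"),                                   # hypothetical
]

for entry in inventory:
    found = review_reasons(entry)
    print(f"{entry.name}: {'; '.join(found) if found else 'no obvious blocker'}")
```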

Another central point is efficient communication with all current stakeholders, partners, and the Adobe team. Involving these groups early, with clear tasks and timelines, is crucial to the project's success.

For example, you will need to involve your internal IT team. Informing them as early as possible is essential to avoid delays later in the project.

It is also essential to review your license agreements with Adobe and confirm that you hold the right licenses for AEM as a Cloud Service.

These agreements govern cloud hosting, data protection, and support. This is also the moment to review all contracts with subcontractors. Do you have a support agreement with a supplier that includes service level agreements (SLAs)? Who is responsible for setting up your ticketing and documentation systems? Review these agreements now and adapt them to the new cloud setup. It is essential that everyone knows their area of responsibility, that the scope of work is clearly defined, and that workflows run smoothly.

This first stage takes only a few days, but it is crucial for three things. It is where you assess the critical aspects of your existing infrastructure, define the project plan and effort, and share information with all key stakeholders.

Step 2 – Preparing the code for AEM as a Cloud Service

In this phase, you prepare the codebase of your current AEM installation to run in the cloud, while keeping it compatible with your existing on-premise systems.

This article does not cover every structural change required to run AEM in the cloud, but it gives you a clear overview.

Adobe provides a useful tool for this purpose, the Best Practices Analyzer. It assesses your AEM implementation and recommends changes in line with Adobe's best practices and standards. The analyzer's report covers, among other things:

- Application functionality that needs to be reworked

- Repository items that must be moved to supported locations

- Outdated dialogs and UI components that should be modernized

- Implementation and configuration issues

- AEM 6.x features that have either been replaced or are not supported in the current cloud version of AEM

We recommend having an AEM expert review the Best Practices Analyzer report, since it may not fully cover every aspect of the codebase and its implications.

An AEM architect or developer should then restructure the codebase accordingly and align it with the practices of the latest AEM archetypes.

We also recommend refactoring older functionality in your codebase.

Extensive testing of the website and applications is planned at a later stage anyway. This makes it a good opportunity to clean up technical debt and build a more robust foundation for the future.
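Many analyzer findings come from one structural rule: the split between immutable and mutable content.

- In AEM as a Cloud Service, `/apps` and `/libs` are read-only at runtime. Code therefore goes into code packages, such as `ui.apps` in the archetype.
- Everything else, for example `/content` or `/conf`, goes into content packages, such as `ui.content`.

The sketch below is a simplified check under that assumption. The `mysite` paths are hypothetical. Adobe's documentation remains the reference for the full rules.

```python
IMMUTABLE_ROOTS = ("/apps", "/libs")  # read-only at runtime in AEM as a Cloud Service


def is_immutable(path: str) -> bool:
    """True if the repository path lies under /apps or /libs."""
    return any(path == root or path.startswith(root + "/") for root in IMMUTABLE_ROOTS)


def classify_package(filter_roots: list[str]) -> str:
    """Classify a package by its filter roots as 'code', 'content' or 'mixed'."""
    if not filter_roots:
        raise ValueError("a package needs at least one filter root")
    flags = {is_immutable(path) for path in filter_roots}
    if flags == {True}:
        return "code"     # belongs in a code package such as ui.apps
    if flags == {False}:
        return "content"  # belongs in a content package such as ui.content
    return "mixed"        # simplified assumption: must be split before deploying to the cloud


print(classify_package(["/apps/mysite/components", "/apps/mysite/clientlibs"]))  # code
print(classify_package(["/conf/mysite", "/content/mysite"]))  # content
print(classify_package(["/apps/mysite", "/content/mysite"]))  # mixed
```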

Step 3 – Setting up the AEM Cloud environments

The goal of this step is to prepare the cloud environment and configure Cloud Manager, the heart of AEM as a Cloud Service. Importantly, this step can run in parallel with the previous one.

Adobe Cloud Manager offers a user-friendly interface that makes it easy to set up environments, configure pipelines, and manage certificates, DNS, and other essential services.

Please note this important point: you need a license agreement with Adobe before you get access to Cloud Manager and the required services. Start talking to your Adobe account manager early to avoid delays in this phase.

Step 4 – Moving your projects to AEM Cloud

By this point, your code has already been reworked. All incompatibilities have been identified and adapted to be cloud-ready.

In addition, all required environments (test, staging, production) are set up and ready to receive your code.

This step is relatively straightforward: you push your code to the Cloud Manager Git repository. During this phase and until go-live, we recommend a feature freeze, meaning that no new features are introduced.

Changes to your production environment may still be unavoidable, or your on-premise installation may need critical fixes. In that case, the same code can be ported to the cloud later.

At One Inside, we have experience with such scenarios. It is important to understand, however, that a feature freeze helps minimize risks such as project delays and increased complexity.

Step 5 – Integration testing with core systems or external APIs

Your website very likely uses data from third-party services or internal applications. To integrate these services seamlessly, specific network settings must be configured in Cloud Manager.

In addition, AEM as a Cloud Service can provide a dedicated static egress IP address as part of its advanced networking options. You must allowlist this address on your side so that the cloud environment can reach your internal applications.

This step is essential to establish a secure and reliable connection between your AEM Cloud environment and your core systems or external APIs.
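On the receiving side, the allowlist check itself is straightforward. Here is a minimal sketch using Python's standard `ipaddress` module. The addresses come from the reserved documentation ranges (RFC 5737) and are placeholders. They stand in for your real on-premise ranges and for the dedicated egress IP assigned to your cloud environment.

```python
import ipaddress


def is_allowlisted(source_ip: str, allowlist: list[str]) -> bool:
    """Return True if source_ip falls inside any allowlisted address or CIDR range."""
    address = ipaddress.ip_address(source_ip)
    return any(address in ipaddress.ip_network(entry, strict=False) for entry in allowlist)


# Hypothetical values from documentation ranges, not real AEM egress IPs.
ALLOWLIST = [
    "198.51.100.0/24",  # existing on-premise AEM servers
    "203.0.113.17",     # assumed dedicated egress IP of the AEM Cloud environment
]

print(is_allowlisted("203.0.113.17", ALLOWLIST))  # True
print(is_allowlisted("192.0.2.50", ALLOWLIST))    # False
```

Keeping the on-premise range in the list until decommissioning lets both installations reach the same back-end systems during the transition.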

Step 6 – Integrating Adobe Target, Adobe Analytics, and the Adobe Experience Cloud suite

Since you already use AEM for your websites, you probably also rely on other solutions in the Adobe Experience Cloud suite, including Adobe Analytics and Adobe Target.

Integrating these solutions is usually straightforward, and they should fit smoothly into your websites.

Because you already use AEM, it is easier to extend the integration to further Adobe Experience Cloud components. This improves your ability to analyze and optimize your digital experiences.

Step 7 – Content migration

Transferring content is an essential step, but it should not cause undue worry. The content structure is the same on your existing website and on the newly set-up AEM Cloud instance.

Think of it as a content move, comparable to transferring content from a staging environment to production.

Adobe also provides dedicated tools that simplify the migration:

- The Content Transfer Tool moves existing content from AEM On-Premise to the cloud.
- Package Manager makes it easier to import and export content in the repository.

Content migration covers more than the web pages themselves. It includes the full range of data in your repository, including:

- Page content

- Assets

- User and group information

Authors can keep creating content on your live website during the migration. To handle this, the Content Transfer Tool supports incremental updates (so-called top-ups). You transfer only the changes made since the last content migration, which keeps the transition efficient and up to date.
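The idea behind such an incremental update is to select only the items modified since the previous extraction. This is a conceptual sketch with a hypothetical in-memory snapshot. It does not reflect how the Content Transfer Tool works internally.

```python
from datetime import datetime, timezone


def select_delta(last_modified: dict[str, datetime], last_run: datetime) -> list[str]:
    """Return the paths changed after the previous extraction, sorted for stable output."""
    return sorted(path for path, modified in last_modified.items() if modified > last_run)


# Hypothetical repository snapshot: path -> last modification time (UTC).
repository = {
    "/content/mysite/en/home": datetime(2024, 3, 1, 9, 0, tzinfo=timezone.utc),
    "/content/mysite/en/news": datetime(2024, 3, 4, 14, 30, tzinfo=timezone.utc),
    "/content/dam/mysite/logo.png": datetime(2024, 2, 20, 8, 0, tzinfo=timezone.utc),
}
previous_extraction = datetime(2024, 3, 2, 0, 0, tzinfo=timezone.utc)

print(select_delta(repository, previous_extraction))  # ['/content/mysite/en/news']
```

A real top-up also has to handle deleted and moved content, which this sketch ignores.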

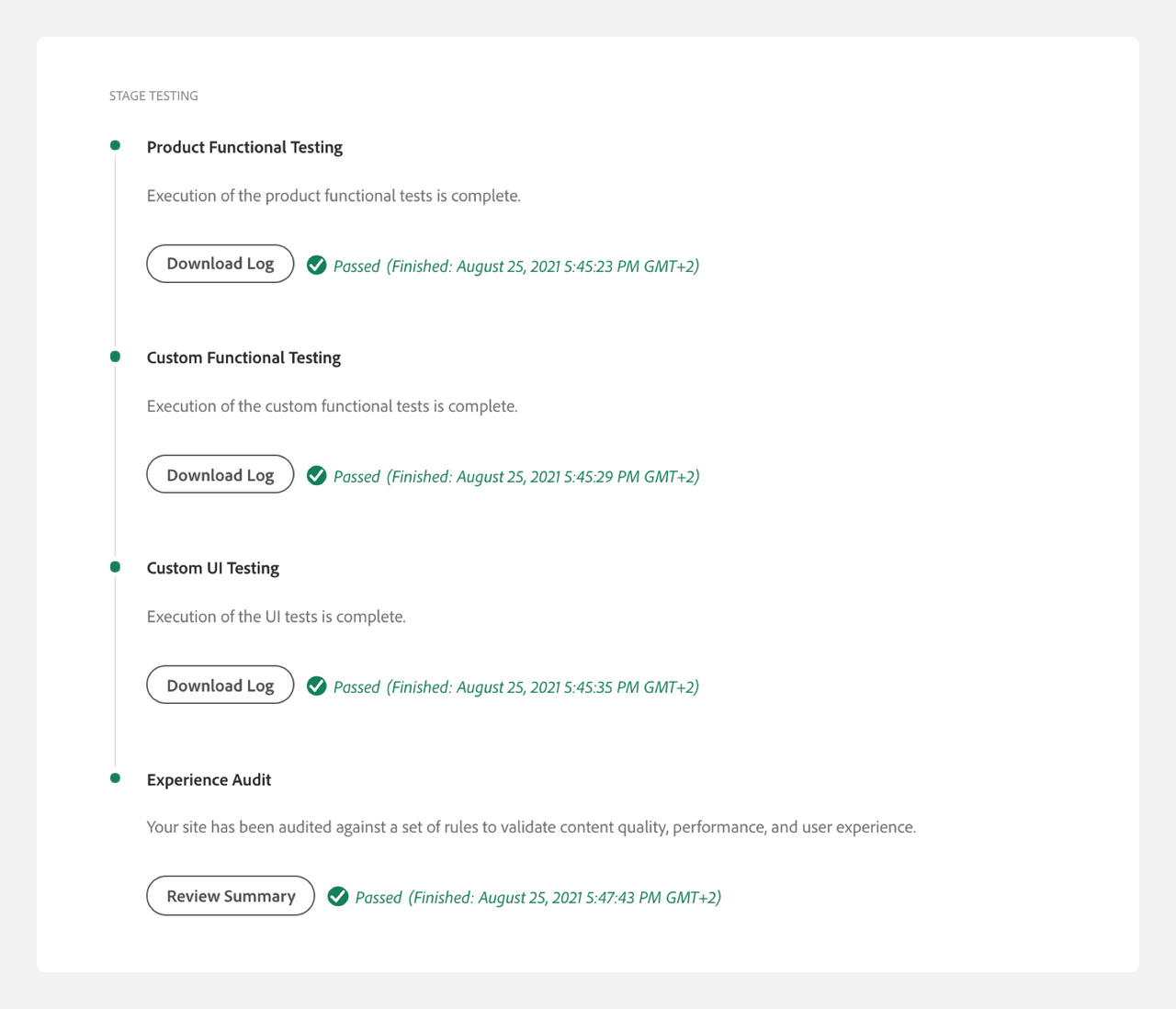

Step 8 – Test, test, test

We are now approaching the final stages of the migration. Testing took place in earlier phases, but it is now time for a thorough user acceptance testing (UAT) phase.

Your dedicated test team and end users should be actively involved in this crucial phase. Prepare a well-defined test strategy before UAT begins.

Involving content authors in testing serves several purposes. They get used to the new environment faster, and they contribute their deep understanding of how the components work. Their feedback, knowledge, and support are key to preserving what makes your digital presence unique.

Thorough testing ensures that the move to AEM Cloud succeeds and that your website works without problems in the new environment.
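A simple complement to manual UAT is to compare the page inventories of the old and the new site. For example, you could use URL lists exported from both sitemaps. Comparing them surfaces missing pages early. Here is a minimal sketch with hypothetical paths:

```python
def compare_inventories(on_premise: set[str], cloud: set[str]) -> dict[str, list[str]]:
    """Compare the page paths found on the old and the new site."""
    return {
        "missing_in_cloud": sorted(on_premise - cloud),
        "only_in_cloud": sorted(cloud - on_premise),
    }


old_site = {"/en/home", "/en/products", "/en/contact"}  # e.g. from the on-premise sitemap
new_site = {"/en/home", "/en/products"}                 # e.g. from the cloud staging sitemap

print(compare_inventories(old_site, new_site))
# {'missing_in_cloud': ['/en/contact'], 'only_in_cloud': []}
```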

Step 9 – Redirecting the domain

This step is the prerequisite for go-live and requires skillful handling by your IT network team.

The team takes care of managing certificates, DNS configuration, and switching the domain over.

As highlighted at the start of this guide, your IT staff must be aware of these key steps from the outset. They should also have taken on the corresponding tasks.

They should be well prepared and know what to do in this phase, since preparations for it have been underway for weeks.

Efficient coordination in this phase is essential to avoid delays in the overall project and in the switchover. A smooth domain redirect ensures that your website moves seamlessly to the AEM Cloud environment.
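Part of this preparation is DNS timing. Resolvers may cache the old record for up to its TTL, so the TTL should be lowered well before the switch. The sketch below computes the latest moment to do so. The cutover time, the 24-hour TTL and the two-hour margin are hypothetical values.

```python
from datetime import datetime, timedelta


def latest_ttl_change(cutover: datetime, current_ttl_seconds: int, safety_margin: timedelta) -> datetime:
    """Latest moment to lower the DNS TTL so that caches holding the record
    with the old, long TTL have expired by the time the record is switched."""
    return cutover - timedelta(seconds=current_ttl_seconds) - safety_margin


cutover = datetime(2024, 6, 10, 6, 0)  # hypothetical go-live window
old_ttl = 86_400                       # assumed current TTL: 24 hours
margin = timedelta(hours=2)            # extra buffer

print(latest_ttl_change(cutover, old_ttl, margin))  # 2024-06-09 04:00:00
```

Once the short TTL is in effect, the switch typically propagates within minutes rather than hours. The TTL can be raised again after go-live.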

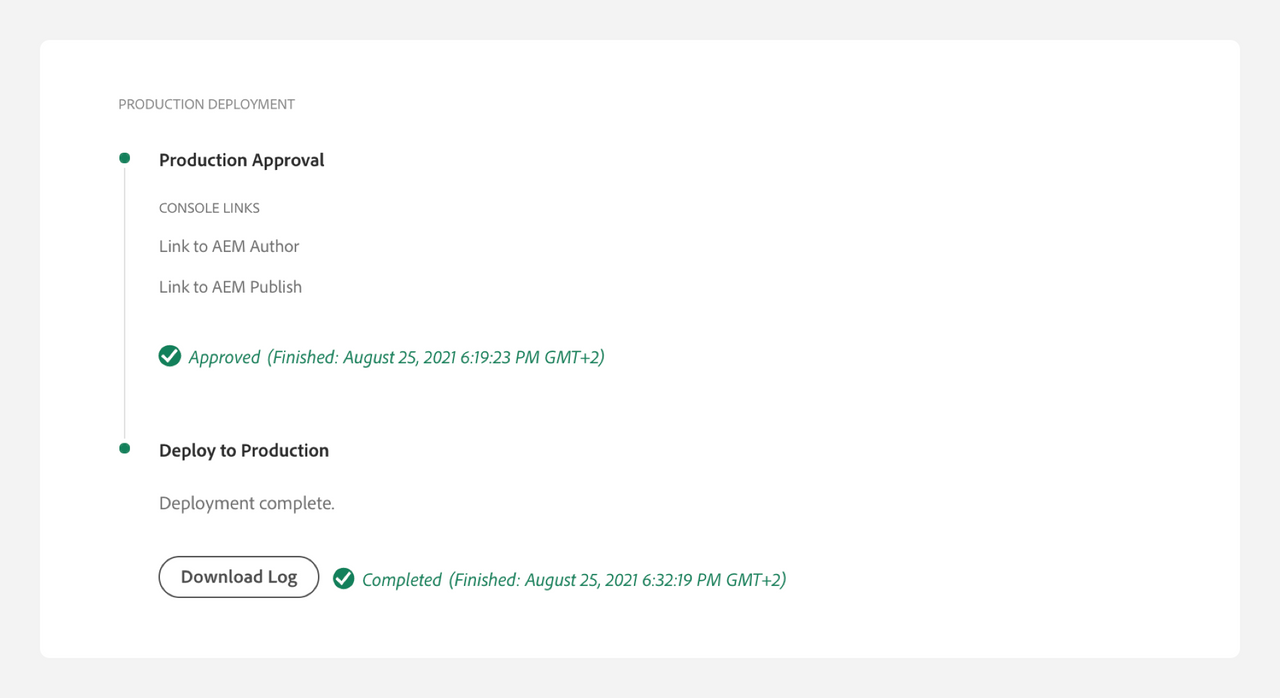

Step 10 – Go-live

This stage may seem the most challenging, but paradoxically it is often the easiest.

Your website has been thoroughly tested and works flawlessly in the cloud. Now it is time for the final switch from your on-premise AEM installation to the cloud version.

For your site's visitors, the transition will be seamless, without any interruption. With careful planning and execution, this step marks the successful completion of your migration to AEM Cloud.
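Right after the switch, a quick automated smoke test of key pages gives early confidence alongside manual checks. The sketch below uses only the Python standard library, and the URLs are hypothetical. The fetch function can be replaced by a stub, as in the last lines, so you can try the logic offline.

```python
from typing import Callable
from urllib.error import HTTPError
from urllib.request import urlopen


def fetch_status(url: str) -> int:
    """Return the final HTTP status code for url (redirects are followed), or 0 if unreachable."""
    try:
        with urlopen(url, timeout=10) as response:
            return response.status
    except HTTPError as error:  # the server answered with an error status
        return error.code
    except OSError:  # DNS failure, refused connection, timeout
        return 0


def smoke_test(urls: list[str], fetch: Callable[[str], int] = fetch_status) -> list[str]:
    """Return the URLs that did not answer with HTTP 200."""
    return [url for url in urls if fetch(url) != 200]


# Offline demo with a stubbed fetcher; omit the fetch argument to request the real URLs.
fake_responses = {"https://www.example.com/": 200, "https://www.example.com/en/contact": 404}
print(smoke_test(list(fake_responses), fetch=lambda url: fake_responses[url]))
# ['https://www.example.com/en/contact']
```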

“For both business and IT leaders, migrating to AEM Cloud is a great source of satisfaction. They see the website running live in the cloud and enter a new era of better performance and exciting opportunities to improve the customer experience.”

Martyna Wilczynska

Project Manager at One Inside – A VASS Company

Step 11 – Training

Extensive training for your content authors is not necessary, since the user interface stays the same.

However, the migration to the cloud introduces an important new tool, Adobe Cloud Manager.

Your IT or DevOps teams should be able to manage this tool. Alternatively, you can hand the maintenance of your website over to an Adobe partner.

At One Inside, we offer training so that your IT staff have the skills to handle key tasks. These include managing SSL certificates, domain mappings, allowlists, and accounts.

Step 12 – Decommissioning the on-premise instance

Finally, we recommend keeping your on-premise server instance running for two to four weeks after the migration. This precaution serves as a safety net in case you need to fall back to the on-premise version for any reason.

In our experience, returning to the on-premise instance is rarely necessary, but it is wise to guard against this potential risk. Once the hypercare phase is over, you can rely fully on your new AEM as a Cloud Service instance, knowing that a fallback plan was in place.

Lessons Learned and Best Practices from Migrating to AEM as a Cloud Service

Our team has gained valuable experience through several successful migrations to AEM as a Cloud Service. We would like to share some proven practices that can help reduce the risk in your migration project.

Start with a detailed analysis

We recommend starting your cloud migration project with a thorough analysis. Avoid a hasty assessment of your existing AEM On-Premise installation. You need a careful evaluation of the dependencies and of the elements that need rework.

If this is your first migration, invest enough time in research and documentation for such a project. Even if you have an internal team looking after AEM, bringing in an experienced Adobe partner is advisable. Their expertise can contribute significantly to the success of your migration.

Manage stakeholder dependencies

It is crucial to identify early which parts of the project depend on which stakeholders. Various members of your organization will play a central role at important project milestones.

We have already stressed the importance of the IT team for network management, but other groups may be involved too, such as security and quality assurance.

At the start of the project, communicate clear expectations to these teams and set precise dates for their involvement. Such a proactive approach helps avoid delays and ensures the project runs smoothly.

Beyond the scope of a typical Scrum project

It may come as a surprise, but a cloud migration project differs considerably from a conventional IT project organized with Scrum.

Normally, we focus on delivering the highest possible value as quickly as possible while continuously seeking customer feedback.

In a cloud migration project, however, the focus is on reworking backend code. This work can only be demonstrated in the final phases, once the website is already running in the cloud test environment. Until then, clients have to place considerable trust in the team, and that trust motivates us to deliver exceptional results.

Regular meetings with the team and stakeholders

With a tight three-month schedule, regular updates with your team and key stakeholders are indispensable. We strongly recommend scheduling weekly meetings to monitor progress, identify risks, and plan countermeasures.

In these weekly meetings, pay particular attention to interactions with other teams and assess the progress of their work. This proactive approach keeps everyone working in step and able to respond quickly to the project's changing requirements.

“Clear communication with clients is key to mitigating risk, identifying issues, and keeping everyone updated on progress during the migration. It reduces clients' stress and ensures transparency in their digital journey.”

Michael Kleger

Project Manager at One Inside

Partnering with Adobe

You need to negotiate licensing before you can access the cloud environment. It is equally important to discuss with your Adobe account manager whether a standby server can remain on premise as a temporary safety net.

Our experience shows that early discussions make the move away from on-premise infrastructure more favorable and flexible.

Adobe also provides support if problems arise. Some features may not work as expected after being adapted for the cloud.

To get fast help from Adobe Support, it helps to work closely with an Adobe partner that maintains a good relationship with Adobe.

At One Inside, for example, we have maintained a close partnership with Adobe for more than a decade. We work closely with the AEM development team responsible for AEM as a Cloud Service, which often proves invaluable.

Our long-standing connection with Adobe and its talented people makes it easier for us to find efficient solutions. We have direct contacts and do not have to navigate several support tiers.

Avoid development on the on-premise instance during the migration

Avoid new development on your live websites during the migration wherever possible. This prevents many potential problems.

We know that a three-month code freeze is often not practical. To minimize potential difficulties, keep the code synchronized across both environments, and make sure it is optimized for the cloud before you make further improvements to your on-premise installation. This coordination reduces complications during the migration.

Use the opportunity to improve

The migration process gives you the chance to test your website thoroughly. Use this opportunity to improve various aspects of your website, such as the architecture, code quality through refactoring, and minor design adjustments.

In our migration projects, we have successfully delivered improvements such as image generation, frontend optimizations, and performance gains. This window lets you do more than move to the cloud. You can also improve the overall quality and functionality of your website.

Conclusions for Your Migration to AEM as a Cloud Service

In conclusion, migrating to AEM as a Cloud Service is a transformative journey that requires careful planning and execution.

AEM as a Cloud Service represents the future of AEM, and this migration lays the foundation for it.

In this article, we have shared valuable insights and best practices from successful AEM Cloud migrations. They range from analyzing dependencies to maintaining a solid relationship with Adobe, and from regular team updates to fixing design flaws. All of these lessons can help you achieve a successful migration.

Embrace the challenges and opportunities of moving to the cloud. A well-executed migration can lead to a more efficient, more secure, and more innovative digital experience for your company and its users. With the right strategy and the support of experienced partners, you can take this path with confidence and achieve excellent results.

We would like to thank the talented people at our company who contributed to this article, including Martyna Wilczynska, Basil Kohler, Michael Kleger, and Samuel Schmitt.

Samuel Schmitt

Digital Solution Expert

The post 12 Steps for a Migration from AEM On-Premise to AEM Cloud appeared first on One Inside.

(source: